Traffic drops can be stressful and frustrating, but they don’t have to be mysterious. This resource will give you a framework to identify the cause of a decrease in pageviews from search and take steps to address any underlying issues.

Is it actually a search traffic decrease?

Start with Google Analytics to verify that the traffic decrease you’re seeing is related to search.

In GA4, you can find this under Reports > Lifecycle > Acquisition > Traffic Acquisition.

Locate the entry for Organic Search, and use the date filters to compare pageviews or sessions to previous time periods (including the same time period in the previous year). Verify that your search traffic actually decreased — if not, move on to other traffic sources until you isolate the traffic source contributing to your traffic drop.

How significant was the decrease?

Once you confirm that search traffic actually dropped, it’s time to gauge the significance of the fluctuation. A significant drop isn’t defined by a specific percentage of your traffic; it’s a sustained decrease in search traffic over a number of days. If traffic recovers quickly, don’t worry about it.
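As a rough illustration of "sustained," the sketch below counts how many days in a row your search sessions stayed below a baseline. The 20% cutoff and the 7-day minimum are hypothetical defaults chosen for the example, not thresholds from Google or from this guide; adjust them to what is normal for your site.

```python
def longest_run_below(daily_values, baseline, drop_fraction=0.2):
    """Longest streak of consecutive days below baseline * (1 - drop_fraction)."""
    cutoff = baseline * (1 - drop_fraction)
    longest = current = 0
    for value in daily_values:
        if value < cutoff:
            current += 1
            longest = max(longest, current)
        else:
            current = 0  # traffic recovered, so the streak resets
    return longest


def is_sustained_drop(daily_values, baseline, drop_fraction=0.2, min_days=7):
    """True when traffic stayed below the cutoff for at least min_days in a row."""
    return longest_run_below(daily_values, baseline, drop_fraction) >= min_days
```

Here `daily_values` would be daily organic search sessions exported from your analytics tool, and `baseline` a typical day from before the drop.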

Use Google Search Console to narrow in on the impact

Now that you know the traffic decrease is related to search, move to Google Search Console to narrow in on the exact impact. Did search impressions or clicks drop? Which pages were impacted?

4 main causes for search traffic decreases

When you’re seeing search traffic decrease, it’s almost always due to one of the following reasons:

- Technical issues or site changes

- Seasonal / event-based shifts

- Algorithmic / SERP design fluctuations

- Meaningful competitive changes

We’ll explore each of these four causes, using a framework from Search Engine Journal, and share tips for recovery.

1. Technical issues or site changes

It’s always good to start by considering any potential technical issues or recent changes to your site. Technical issues don’t magically happen. There is always a triggering action, whether it was something you did or something a third-party vendor, tool, or service did.

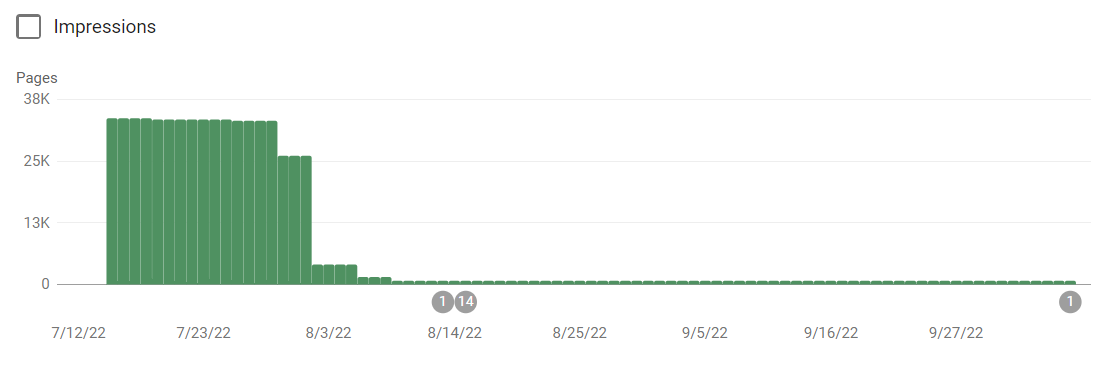

Search traffic drops related to technical issues or changes typically look like a “cliff” in Google Search Console: a major site change produces a sudden, significant impact on search results. The cliff is specific to a point in time, and you can generally track it back to an event like a site design change, hosting change, outage, or front-end change.

Avoiding “The Cliff”

When you launch something new for your site, the best approach is to release updates in small chunks, testing and measuring impact and then expanding based on data.

If you see negative impacts, this approach allows you to revert each small change and iterate until the final solution works out with far greater confidence.

Addressing “The Cliff”

If you haven’t taken an iterative approach, the second best option is to roll everything back and re-implement each change separately.

Sometimes, it’s not possible to revert your changes, you don’t want to revert them, or the changes were outside your control — you simply have to move forward. This is the trickiest traffic decrease to fix since you need to identify what happened at every level of the site.

Is it an access issue? Is it a crawling issue? Is it an indexing issue? Or is it ultimately a ranking issue? Evaluate each of those paths independently, or engage technical help at this point.

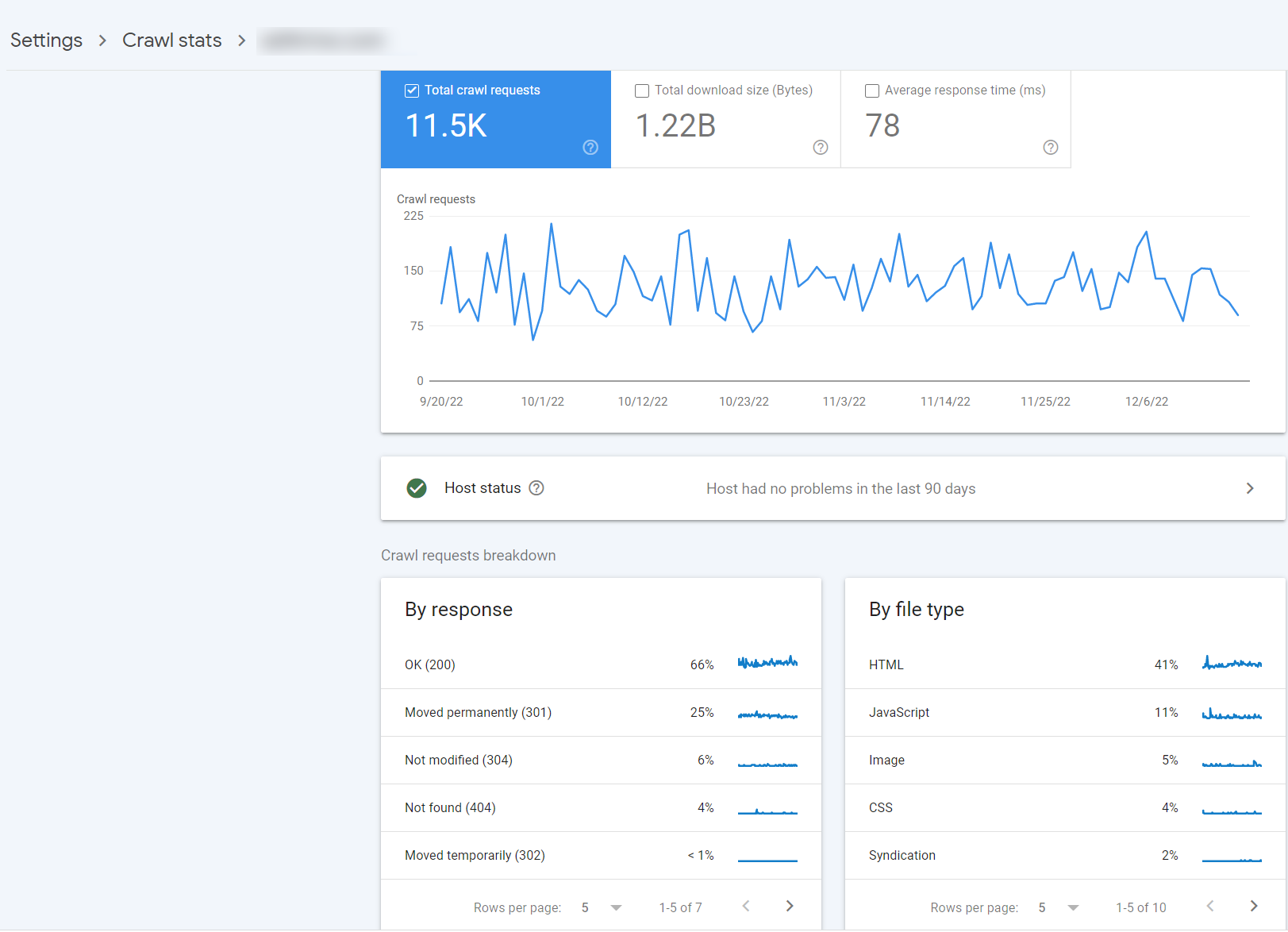

Access and crawl issues

Can Google and visitors get to your site? Access issues are related to robots.txt files, .htaccess rules, robots directives, server or hosting issues (4xx and 5xx status codes), or CDN issues. If Google can’t get to your site, your site can’t rank.

Check your sitemaps and log files to see these types of issues and compare them to a historical baseline. If the logs look fine, there are likely no crawl issues.

- Is the crawl different?

- Is the distribution of error codes different?

- Did errors impact your important pages that are seeing traffic drops?

- Has the amount of time it takes to download pages changed?

- Has the amount of data being consumed changed?

- Does this amount of data line up roughly with the size of your page?

Some basic tools like Screaming Frog and Semrush offer limited log file analysis, and Google Search Console provides Crawl Stats.

For more sophisticated analysis, consider hosted solutions like Botify, Logz.io, or an AWS-hosted ELK stack.
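If you want a quick look before reaching for one of those tools, the sketch below shows one way to compare the distribution of status codes served to Googlebot between two log samples. It assumes your server writes the common "combined" access log format and that matching the user agent on the substring "Googlebot" is good enough for a first pass (user agents can be spoofed); both are assumptions, so adapt the pattern to your own logs.

```python
import re
from collections import Counter

# Pulls the status code that follows the quoted request in a combined-format line.
# The log format is an assumption; adjust the pattern to match your server.
LOG_LINE = re.compile(r'"(?:GET|POST|HEAD) \S+ [^"]*" (?P<status>\d{3}) ')


def status_class_shares(log_lines, agent_substring="Googlebot"):
    """Share of matching requests per status class, e.g. {"2xx": 0.9, "5xx": 0.1}."""
    counts = Counter()
    for line in log_lines:
        if agent_substring not in line:
            continue  # only look at requests from the crawler of interest
        match = LOG_LINE.search(line)
        if match:
            counts[match.group("status")[0] + "xx"] += 1
    total = sum(counts.values())
    return {cls: n / total for cls, n in counts.items()} if total else {}
```

Run it once on logs from before the drop and once on logs from after, then compare the two results. A jump in the 4xx or 5xx share after the drop points toward an access or crawl issue.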

Indexing issues

Is your page in the search results? If your log files look normal, but your pages still aren’t appearing in search results like before, look at indexing issues. Indexing issues can be related to noindex directives, duplicate content or rel=canonical issues, and sometimes JavaScript framework issues.

Ranking issues

If you know the drop is due to a technical change, but you’ve ruled out access/crawl issues and indexing issues, then ultimately, it’s a ranking issue. Review Core Web Vitals for pages that dropped in relation to their competitors, and look at schema markup.

Ultimately, you may find that rankings change when you make major technical changes to your site with no easy recourse. When you change the core dynamics (domain, URLs, back-end site architecture, front-end design) of how you deliver content to users and search engines, you recalibrate that dynamic and may never reach the previous balance point.

Should you start with an audit?

When you see “the cliff,” it can be tempting to start with a full site audit and identify dozens of things you could improve for SEO. However, an audit can be a distraction at this point.

You’re not looking for a lot of small things to improve — you’re looking for the one change that happened at the point of the traffic drop.

If you have the results of a technical audit from before the change, you can compare before and after. But starting with an audit after the change can highlight many things that may not be the root cause of your traffic decrease.

2. Seasonal or event-based shifts

If you’ve ruled out a technical issue with your site impacting search traffic, consider whether the traffic shift is related to seasonal patterns or changes based on specific events.

Understanding expected traffic

Seasonality can make it challenging to identify true traffic drops, so use percentages to understand if a post is truly receiving a smaller share of traffic.

For example, let’s say a particular post typically receives 50,000 pageviews in a week. Overall, your site receives 500,000 pageviews, so that post receives 10% of your traffic in an average week.

When you see a traffic decrease, determine whether the post’s traffic for that week was above or below what you expected.
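The share calculation above can be sketched in a few lines. The 50,000 and 500,000 figures come from the example; the slower week (400,000 site pageviews, 30,000 for the post) is a hypothetical scenario added for illustration.

```python
def typical_share(post_pageviews, site_pageviews):
    """Fraction of site pageviews that a post receives."""
    return post_pageviews / site_pageviews


def expected_pageviews(site_pageviews, share):
    """Pageviews the post should get if it kept its usual share of site traffic."""
    return site_pageviews * share


# Average week from the example: 50,000 of 500,000 pageviews is a 10% share.
share = typical_share(50_000, 500_000)

# Hypothetical slower week: the whole site drops to 400,000 pageviews.
expected = expected_pageviews(400_000, share)  # about 40,000
actual = 30_000
underperformed = actual < expected  # the post lost share, not just seasonal volume
```

If the post had received about 40,000 pageviews in that slower week, its share would be unchanged, and the decrease would reflect the site-wide dip rather than a problem with the post.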

Is it seasonality?

Plot the events that affect your business on a calendar and map them back to your historical traffic to understand when seasonality impacts your site traffic. Consider this when you’re analyzing expected traffic, and use historical data in Google Analytics to compare to the same week in the previous year.
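When you compare to the same week in the previous year, it helps to line up the days of the week, since weekday and weekend traffic often differ. The sketch below takes one possible approach: step back exactly 52 weeks rather than one calendar year. The function names are hypothetical helpers, not part of Google Analytics.

```python
from datetime import date, timedelta


def same_week_last_year(week_start):
    """Start of the comparison week, 52 weeks earlier, on the same weekday."""
    return week_start - timedelta(weeks=52)


def percent_change(current, previous):
    """Change from previous to current, as a percentage."""
    return (current - previous) * 100 / previous


this_week = date(2024, 3, 4)                  # a Monday
last_year = same_week_last_year(this_week)    # also a Monday
```

Pull pageviews for the week starting on each date, then pass the two totals to `percent_change` to see how this year compares.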

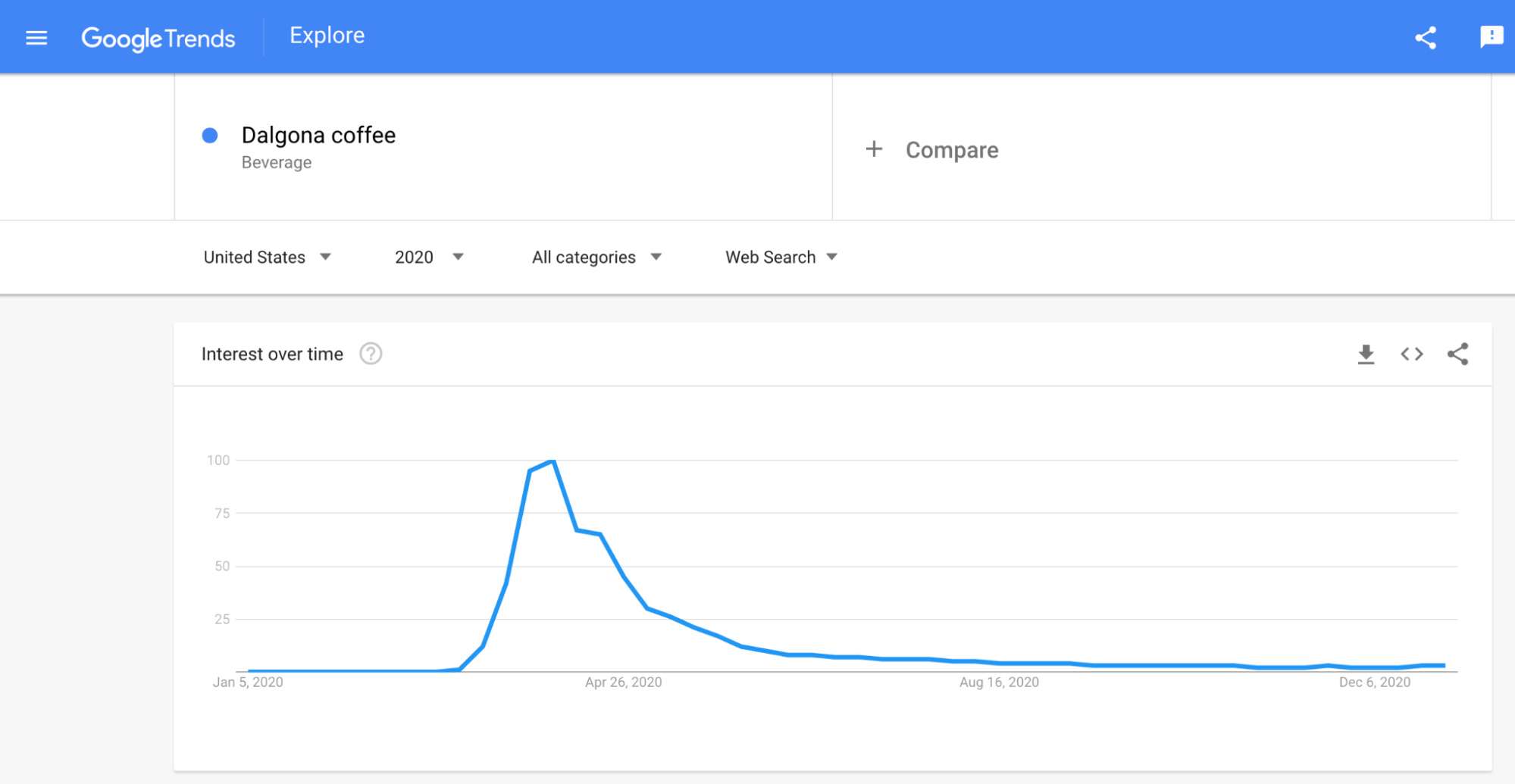

Is it a shift in event-based traffic?

Single event-based traffic is easy to spot in your data. Look at the post’s share of pageviews for each week in relation to overall traffic in your historical data.

If a post recently spiked unusually high (perhaps due to a viral content trend), a traffic “decrease” may simply be an end to that event-based traffic.

For example, in 2020, Dalgona coffee was a short-lived trend with massive traffic spikes that returned to normal levels within a few months. Google Trends is a great way to understand seasonal fluctuations.

3. Algorithmic or SERP design fluctuations

Google makes changes to the search algorithm and SERP layout all the time. Some updates have names and announcements, but hundreds of small fluctuations happen behind the scenes each year.

Sometimes these updates are minor, specific to a region, or specific to a particular type of search result. Google announces or confirms the big updates.

Track Google’s named/announced updates

When you have advance notice or confirmation of a Google update, note the dates and measure any impact on your search traffic a few weeks after the rollout ends.

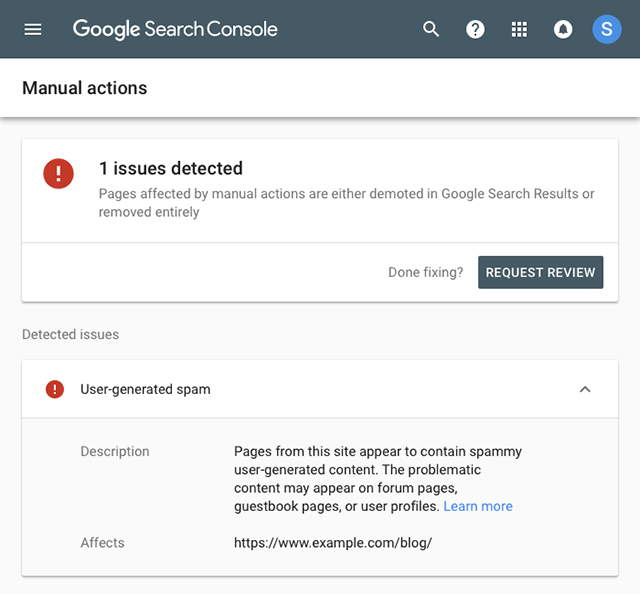

Question 1: Is there a manual action in Google Search Console?

Per Google, “if a site has a manual action, some or all of that site will not be shown in Google search results.”

Manual actions levied against content on your site are very rare but easy to identify or rule out. You’ll see any manual actions specifically laid out in Google Search Console for issues like user-generated spam, problems with structured data, unnatural links to or from your site, or keyword stuffing.

As the name implies, a manual action means that a human, not an algorithm, looked at the page to determine the issue and decided to remove the page from search results.

If you see an issue highlighted in the Security and Manual Actions section of your Google Search Console account, fix the issue and request a review through Google Search Console.

If you don’t see any issues highlighted in your Google Search Console account’s Security and Manual Actions section, you’ve ruled this out as a reason for your traffic drop.

Question 2: Where is the pageview impact?

If you’ve ruled out a manual action, next, identify if the pageview impact is localized to some subset of your content.

Typically, traffic doesn’t decrease on all pages equally (for example, every page losing 25% of its pageviews to produce a 25% decrease in site traffic). Instead, traffic decreases dramatically on some pages, and those pages have an outsized impact on your site traffic.

Identify the exact pages with traffic decreases for closer analysis. What happened to those pages that didn’t happen to another group of pages? Is the traffic drop related to a loss of position in search results, loss of keyword coverage, or a shift in the SERPs?
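To find the pages with an outsized impact, one option is to rank pages by how much of the total loss each one accounts for. The sketch below assumes you have exported pageviews per page for a period before and a period after the drop into two dictionaries; the function name and data shape are hypothetical.

```python
def drop_contributions(before, after):
    """Pages that lost pageviews, biggest loss first.

    before / after map page URL -> pageviews for each period.
    Returns (page, lost_pageviews, share_of_total_loss) tuples.
    """
    losses = {page: views - after.get(page, 0) for page, views in before.items()}
    total_loss = sum(lost for lost in losses.values() if lost > 0)
    ranked = sorted(losses.items(), key=lambda item: item[1], reverse=True)
    return [
        (page, lost, lost / total_loss)
        for page, lost in ranked
        if lost > 0  # pages that held steady or grew are left out
    ]
```

The pages at the top of the list are the ones to examine first.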

Question 3: Did you lose your position in search results?

Loss of position happens to a specific keyword. It’s a very narrow scope.

Evaluate the results that have taken the spot your content used to hold in search results, and identify any content or search intent gaps between you and these new competitors.

For example, previously, you were in position two for a certain keyword, but now you’re in position four. That means the Google algorithm scoring system has changed how it values your page and now values a different piece of content for the second position.

It’s time for some detective work: what are your competitors doing differently that is suddenly being scored higher than before the algorithm update?

- Do the pages have a table of contents?

- Do the pages have a particular type of image?

- Do the pages have the same type of markup?

- Do the pages focus on a particular type of content?

- What else do the pages have in common?

Create a list of hypotheses. How are these pages answering the user’s question differently?

Test your hypotheses by iterating on the pages that lost traffic, ideally starting with pages in the middle of the group rather than your biggest pages.

Once you lock in the things driving growth again, combine them on the biggest pages.

Have patience and iterate until you are happy.

Question 4: Did you lose keyword coverage?

Loss of keyword coverage is a little more challenging to diagnose because it doesn’t relate to one specific keyword. Loss of keyword coverage happens to a cluster of keywords.

If the content that’s now showing up instead of yours is completely different, evaluate whether the search intent is narrower or broader than your specific content.

Create new content to address the search intent for this keyword, or go back through your post and identify and address specificity that may not be necessary for the user’s search intent.

Example: broad and narrow search intent

For example, let’s say you used to rank for “apple pie recipe” with your “simple apple pie recipe” post. But now, when you visit the search results for “apple pie recipe”, none of the listings are that specific. Google has decided that “simple” isn’t a key concept when people do a broad search like “apple pie recipe.”

Now, you can go back to your content and decide if it’s too narrow. Is the specificity of “simple” critical to the user and the content?

Conversely, let’s say you used to rank for the broad keyword “apple pie recipe”, but you’re not winning that anymore. This could indicate that you need to narrow your keyword focus to something more specific, like “simple apple pie recipe.”

Question 5: Did the search results page change?

If you’ve ruled out a change in rankings, keyword coverage, and impressions, but you’re still seeing an impact on CTR and clicks, it’s time to look at the search results page.

Google often changes the search results page with different design elements or features that can affect CTR. When you see this happen, consider how your content can be included in all the variety that’s been added to the result page.
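One way to spot this pattern in your Search Console data is to check whether impressions and average position held steady while CTR fell. The sketch below is a rough heuristic; the 5% tolerance and the half-position threshold are hypothetical values chosen for illustration, not Google guidance.

```python
def ctr(clicks, impressions):
    """Click-through rate as a fraction of impressions."""
    return clicks / impressions if impressions else 0.0


def looks_like_serp_change(before, after, tolerance=0.05, max_position_shift=0.5):
    """True when impressions and position held steady but CTR fell.

    before / after are dicts with "clicks", "impressions", and "position"
    for the same query or page in two comparable periods.
    """
    impressions_stable = (
        abs(after["impressions"] - before["impressions"])
        <= tolerance * before["impressions"]
    )
    position_stable = abs(after["position"] - before["position"]) <= max_position_shift
    ctr_fell = ctr(after["clicks"], after["impressions"]) < (1 - tolerance) * ctr(
        before["clicks"], before["impressions"]
    )
    return impressions_stable and position_stable and ctr_fell
```

A `True` result is only a prompt to open the live search results page and see what changed, not proof of a redesign.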

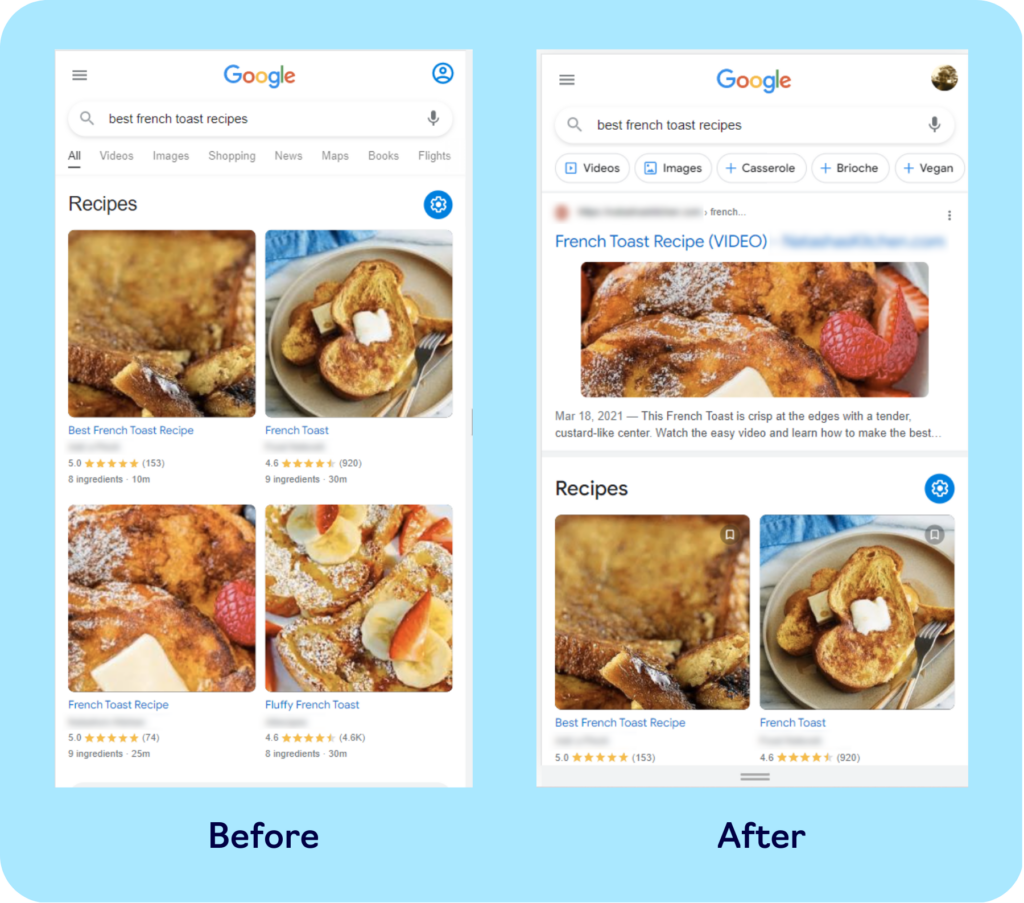

Example: a change in SERP design

In this example, the number one piece of content used to make up 25% of the page above the fold on mobile, but after the redesign, it now makes up 50% of the page.

Before, there were four results showing up above the fold. Now, there are only three. Number four is still number four, but now it’s below the fold with significantly lower CTR and clicks.

It’s easy to interpret this as “Google just doesn’t like my site,” when in reality, Google is just revising the results page to engage more users.

4. Meaningful competitive changes

The changes your competitors are making can be the hardest factor to identify, but third-party tools like Topic, Semrush, Ahrefs, and Moz can help you track who your competitors are, who’s moving up in the world, and what they’re doing with their content.

When you see ranking drops, watch for patterns in your competitors. Learn and innovate on your site using the best ideas of your competitors. The earlier you spot the new trend, the quicker you can recover lost traffic.

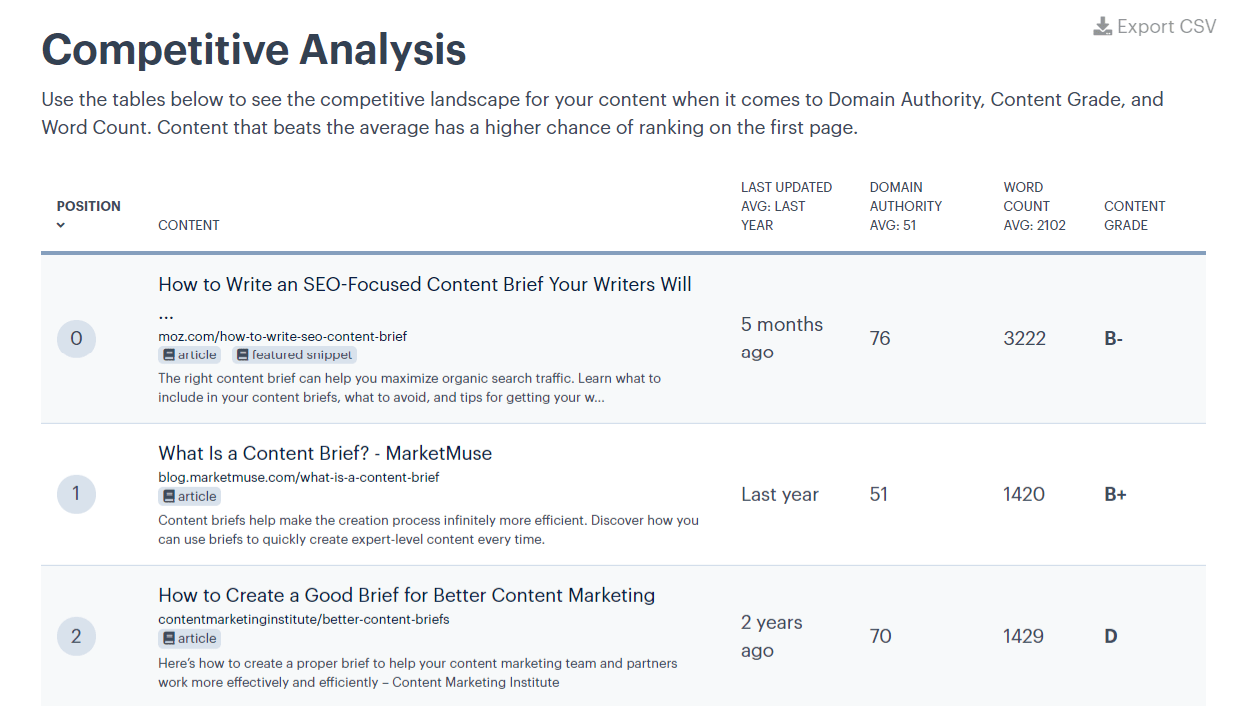

Use Topic as a competitor evaluation tool

You may already know that you have the industry’s leading SEO content optimization tool in your dashboard for free, but you might not realize just how powerful the competitive research capabilities are!

When you generate a content brief for a specific keyword you want to target or optimize for, Topic pulls together a wealth of competitive information about what content is ranking well for this keyword.

You can drill down to analyze how each competitor is structuring their content and search for patterns.

Is it backlinks?

Use a third-party tool like Moz, Ahrefs, Semrush, or Majestic to understand how many backlinks your competitors are getting compared to you.

Sometimes, no matter what you do on the content side, it won’t be enough to overcome a competitor with a much more authoritative backlink profile.

If you’ve maximized your content’s value, freshness, and structure, the next step is to analyze your competitors to look for a gap in backlinks.

Then, determine how you will build higher authority and increase awareness for your content over time. Meanwhile, explore slightly less competitive keywords using a waterfall keyword research strategy to regain your authority on this topic.

To recap, start by identifying the potential causes of a traffic drop.

Was it algorithmic? Was it a SERP design change? Is there something technically wrong with your site? Was there a seasonal or event-driven change in interest? Or did your competitors get stronger?

Once you’ve identified the cause, it’s time to trigger a workflow, gather all your data, and start building hypotheses to test. Use tests to validate your recovery tactics, then roll out the validated changes across the rest of your content.